- Drupal/xcart to wordpress/Woocommerce

- Magento to WordPress/Woocommerce

- OSCommerce to WP/Woocommerce

Using database and text utilites for migration/development research

The best way to migrate a site from one platform to another is to use the tools and utilities designed for import export. Sometimes you get a situation where that won’t work any longer due to customizations, extreme legacy, or many other reasons. Or becuase there are no import/export tools available. Maybe the best prospect for getting the data is using wget recursively, then parse the html and do something with it.

wget –mirror https://example.com

for i in `find "pattern that matches your files"`; do php your-custom-script.php "$i"; done

The above would likely be tricky to get right.

One project I worked on involved migrating a site from Drupal to WordPress. It was a big site with an x-cart ecommerce site, and thousands of posts and images, along with tags and categories. Most development boils down to some variation of the same formula:

- Define problem

- Come up with a solution

- Code it

- Run it/test it

- Debug it

- Document it

The solution that we came up with was to loop over the source database, parse the posts out and insert them into the target database, retaining src_id in the target database. Keeping the legacy id allowed us to comb over the system as much as necessary to get all the relevant connections, like tags, categories, orders, customers.

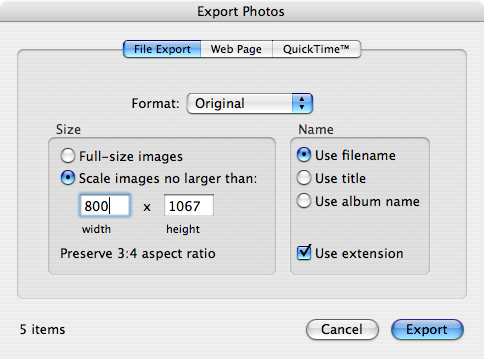

Here is an important development technique for researching or reverse engineering how cms code writes to the database. Using the mysql console app, I investigated by running queries to show tables inside the database and to describe the individual tables. This process looks at table names and describes the details of that table:

This goes a long way, but it’s also nice to use grep. You can use mysqldump to get a text file of the database and use grep or vim to search that with regular expression. Two nice ways to use mysqldump for schema research are:

mysqldump database_name --skip-extended-insert > database_name-`date +%F`.sql

That command results in all the data from database_name dumping into a text file named database_name-2021-09-23.sql with a line for each record, rather than default of mysqldump fitting as many rows as it can into a 5k character long line. Be aware that skipping extended insert can take a while for a large dataset.

And:

mysqldump database_name --no-data > database_name-schema-`date +%F`.sql

Which will ignore the data and just write the table definitions to a similarly name schema file.

Once you have the the database in text format, you can use text utitlities like grep to search it. So if you have a post with a unique classname in it, to find where the system stores the classname, you can do ‘grep unique-class-name databasename-2021-09-23.sql’ and get the field name and table that contains it, then search code for where that field is updated.

You could also use the mysql information_schema database which might be more convenient, like this example query:

SELECT TABLE_SCHEMA, COLUMN_NAME, DATA_TYPE FROM COLUMNS WHERE COLUMN_NAME LIKE '%field-search-string%';

Sql looks so shouty! It’s actually not case sensitive, you can type it i n lower case and it will work, but the convention is to do sql keywords uppercase for readability and follow the case of the database, table, and field as they were created.

Commandline Scripting

Migration scripting is a very effective use of the command line. This bypasses the webserver’s timeouts and memory limits, though it can effectively block the server, so know what you are doing before you do migration in production environemnt.

For your script, the top line should be this or something like it, so that the script only runs via command:

<?php if ( ! defined( 'PHP_SAPI' ) || 'cli' !== PHP_SAPI ) die( header( 'Location: /' ) ); ini_set( 'display_errors', 'On' ); ini_set( 'implicit_flush', 'On' ); // use this later to see how long script runs. Note that this is only fun when the script takes a long time. $time_start = time(); include '../wp-load.php';

Commonly the scripts have to run from inside the web dirctory structure to take advantage of app functionality, but of course it is important for security that they are not accessible from the internet.

I like to grant permissions on the source_db to the webserv user of the target_db as set in wp-config.php. This configuration makes it convenient to pull data from the source_db and use whatever WordPress hooks and filters you need. The last line above includes the database connection, and since we’ve granted permission on the source_db to the target_db user, we can use that to run queries on both databases.

Your script should be harmless if you just run it witout options. I like to add a usage() function to show opts, then in the script parse the options and and do the right thing.

That part might look something like this:

// runs and returns current options as specified on command line and verified against allowed values

function script_parse_args() {

// each opt is a single character. The colon marks it as requiring a value. -h doesn't need an arg. -p does need an argument

$short = 'p:hn';

$long = array(

'post_type:',

'help',

'no-action',

);

// get the cli params

$raw = getopt( $short, $long );

$out = array();

// post_types

$allowed = array( 'shop_order', 'wc_user_membership', 'wc_membership_plan', 'tribe_events', 'tribe_venue', 'page', 'product', 'order', 'user', 'video', 'teacher');

if( empty( $raw ) or array_key_exists( 'h', $raw ) or array_key_exists( 'help', $raw ) ) {

die( show_help() );

}

if( array_key_exists( 'p', $raw ) || array_key_exists( 'post_type', $raw ) ) {

// handle

if( array_key_exists( 'p', $raw ) && in_array( $raw['p'], $allowed ) ) $out['post_type'] = $raw['p'];

if( array_key_exists( 'post_type', $raw ) && in_array( $raw['post_type'], $allowed ) ) $out['post_type'] = $raw['post_type'];

}

if( array_key_exists( 'n', $raw ) || array_key_exists( 'no-action', $raw ) ) $out['test'] = 1;

return $out;

}

The help/usage function that runs if there are no arguments or if -h –help is requested is this:

// Function shows the command line switches, cli switches

Function usage() {

$msg = "\tScript uses wp-cli for some features, media import in particular.

Imports posts of post_type from src database to the current database assuming script is called from within wp install

Options: -n --no-action, -h --help, -p --post_type,

-h | --help show usage function

-p | --post_type the wordpress post_type to be imported

-n | --no-action Test mode, doesn't write anything to database

";

echo $msg;

}

The basic plumbing of the script handled, we still need to do something with it. The main structure I settled on is to select a group of posts from the source_db and do a group of actions to them by loopiing over them. Like this pseudo-code:

// $condition could be anything -- id_field > 1000? start_date < $certain_time?

$sql = "select * from src_table where 1 and $condition";

if( !empty( $sql ) ) {

$posts = $wpdb->get_results( $sql );

$i = 0;

foreach( $posts as $post ) {

$i++;

do_post( $post );

// don't need incrmental hundreds count on --no-action -n test

if( !array_key_exists( 'test', $opts ) ) {

if( $i % 100 == 0 ) echo "\n$i of $cnt \n";

}

}

$time_end = time();

$elapsed = $time_end - $time_start;

if( $elapsed > 60 ) {

$took = ( $elapsed / 60 ) . " minutes";

}

echo "\nTook: $elapsed seconds";

echo !empty( $took ) ? ", $took\n": '';

echo "\n\ntype: {$opts['post_type']} i: $i\n";

echo "done\n";

}